Apr 1, 2025

Beyond the Controller - Why Hand Interaction Matters in XR

Introduction

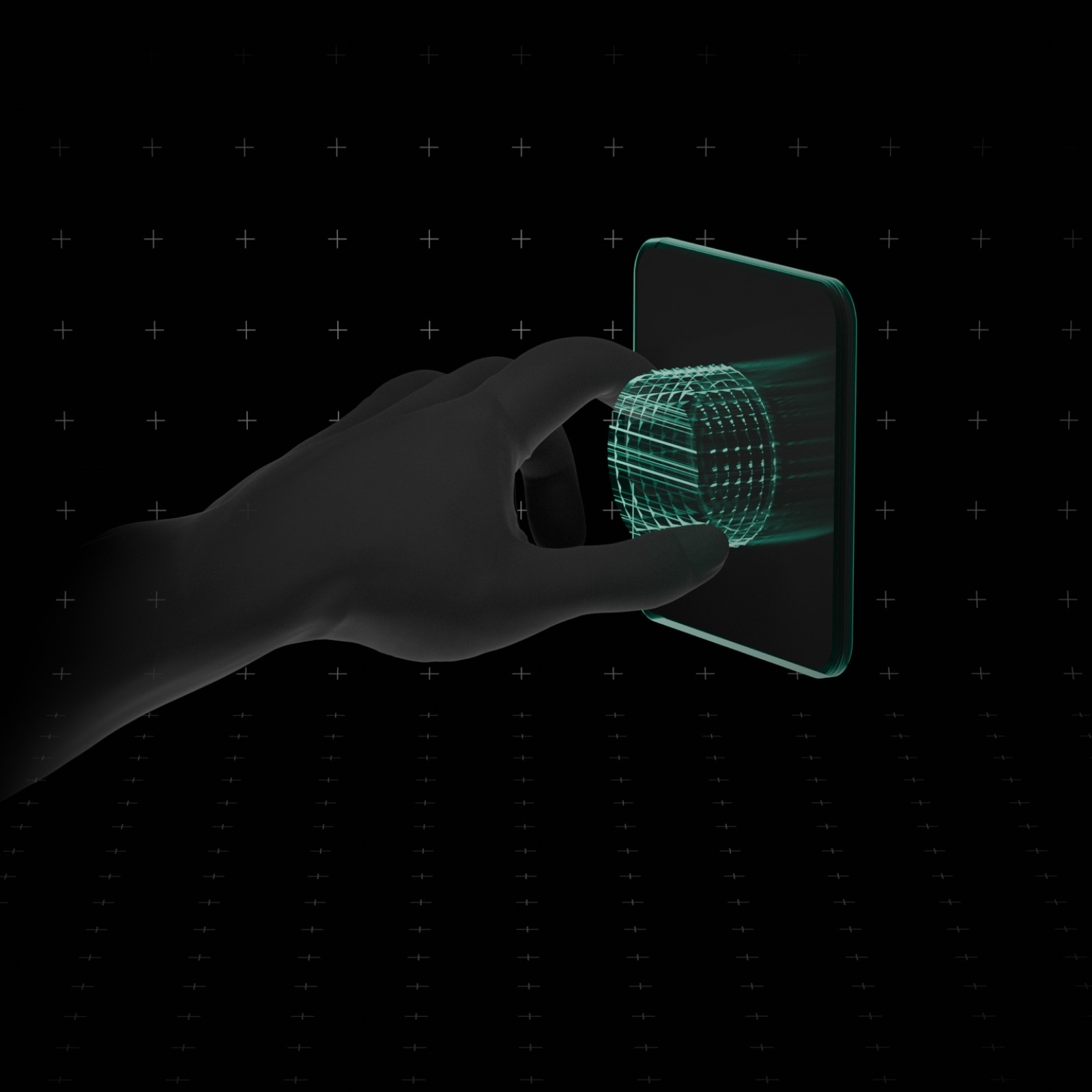

Every day, we witness huge improvements across all segments of Extended Reality (XR): Virtual Reality, Augmented Reality, and Mixed Reality. Graphics are advancing rapidly, experiences are becoming more immersive, and XR streaming is removing infrastructure constraints. But one area often lags behind: interaction. Without natural hand input, the experience remains incomplete.

The Limitations of Controller-Based Interaction

How do you interact with your real-world surroundings? Would you use a controller to grab a cup of coffee, cook, or write a letter? Probably not. The same holds in XR: for many tasks, at work or at leisure, controllers feel unnatural. They take training to learn, often feel counterintuitive, and depend on external hardware.

To make XR as realistic, productive, and engaging as possible, we must prioritize natural hand interaction. It also reduces cost and makes deployments easier to scale, since there are no controllers to buy or maintain.

Why Hand Interaction is the Future

The lack of natural hand interaction is the last major barrier between users and truly immersive XR. If the visuals are already realistic and fluid, as they are on many modern devices, what's missing is natural input.

Removing the controller opens new possibilities, some of which are only feasible with hand tracking. Examples include physical rehabilitation and command center interfaces, where speed and precision are critical. In such contexts, controller-based interaction just doesn’t cut it.

How to Distinguish “Good” from “Bad” Interaction Tools in XR

Choosing hand interaction isn’t enough. Not all solutions are equal. When evaluating options, consider:

Precision: Can it track all fingers and subtle gestures?

Responsiveness: Does it offer low latency and smooth motion?

Flexibility: Can it support UI taps, object grabbing, and dynamic gestures?

How Our OctoXR Hand Interaction SDK Approaches the Problem

We built OctoXR on Unity - one of the most versatile engines for XR - to give developers and designers a flexible foundation. Unreal support is also on the way.

Key features include:

No-code, low-code, full-code support: Whether you're a designer or an advanced developer, choose your depth.

Physics-based object manipulation: Realistic interaction powered by industry-leading physics.

Gesture recognition: Static and dynamic gestures are already supported; micro gestures are coming in 2025.

UI support: Direct touch, distance pinch, and virtual keyboards - usable even outside XR (e.g., for IoT apps).

Locomotion systems: Let users move naturally or teleport in sci-fi fashion.
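
To make the gesture recognition item above more concrete, here is a minimal, engine-agnostic sketch in Python of how a static pinch gesture might be detected from tracked fingertip positions. It is an illustration only and not OctoXR's API or implementation: the `PinchDetector` class, its threshold values, and the assumption that joint positions arrive as `(x, y, z)` tuples in metres are all hypothetical. The sketch uses two thresholds (hysteresis) so that small tracking jitter near the boundary does not make the pinch flicker on and off.

```python
import math
from dataclasses import dataclass

# Hypothetical joint position: (x, y, z) in metres.
Point = tuple[float, float, float]


@dataclass
class PinchDetector:
    """Static pinch gesture with hysteresis. All thresholds are illustrative."""
    start_m: float = 0.02    # pinch begins when the tips are closer than this
    release_m: float = 0.04  # pinch ends when the tips are farther than this
    pinching: bool = False

    def update(self, thumb_tip: Point, index_tip: Point) -> bool:
        """Feed one tracking frame; return whether a pinch is active."""
        distance = math.dist(thumb_tip, index_tip)
        if self.pinching:
            # Already pinching: only release past the wider threshold.
            if distance > self.release_m:
                self.pinching = False
        elif distance < self.start_m:
            # Not pinching: only start inside the tighter threshold.
            self.pinching = True
        return self.pinching


# Simulated fingertip gaps (metres) over five frames.
detector = PinchDetector()
gaps = [0.06, 0.03, 0.015, 0.03, 0.05]
states = [detector.update((0.0, 0.0, 0.0), (gap, 0.0, 0.0)) for gap in gaps]
print(states)  # [False, False, True, True, False]
```

Note how the 0.03 m gap reads as "not pinching" on the way in and "still pinching" on the way out. That tolerance ties back to the precision and responsiveness criteria: the noisier the tracking, the wider the gap between the two thresholds has to be, and the less subtle the gestures a system can reliably detect.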

Conclusion

Natural hand interaction in XR isn’t just a UX improvement - it’s a strategic shift. As the industry evolves, the most impactful XR experiences will be those that feel less like technology and more like reality. Hands are the most natural tool we have - OctoXR just brings them into the virtual world.